Experiments

Tests and side projects exploring new boundaries of creative practice.

“Through reproduction from one medium into another the real becomes volatile, it becomes the allegory of death, but it also draws strength from its own destruction, becoming the real for its own sake, a fetishism of the lost object which is no longer the object of representation, but the ecstasy of the degeneration and its own ritual extermination: the hyperreal.”

—Jean Baudrillard, Symbolic Exchange and Death

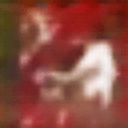

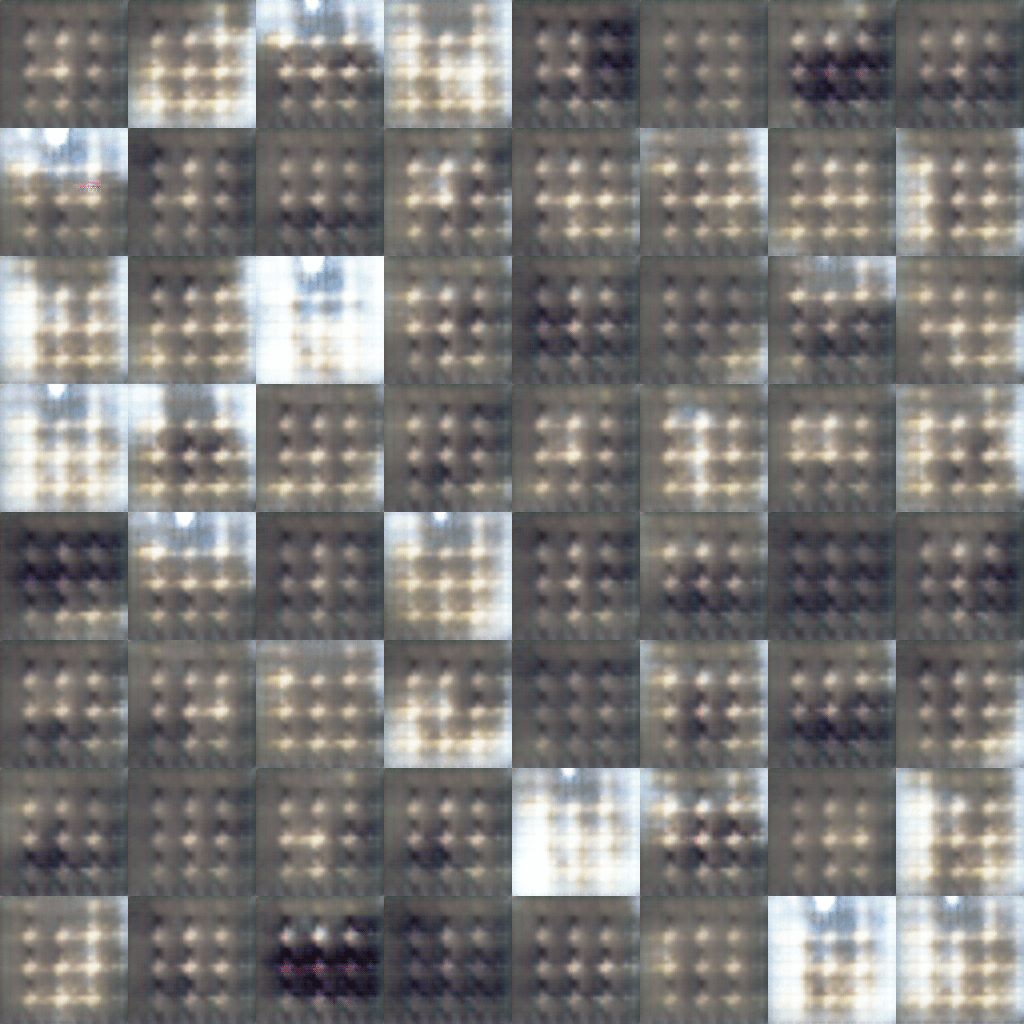

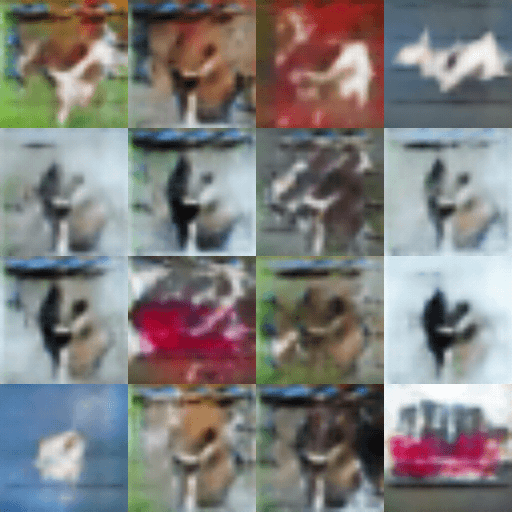

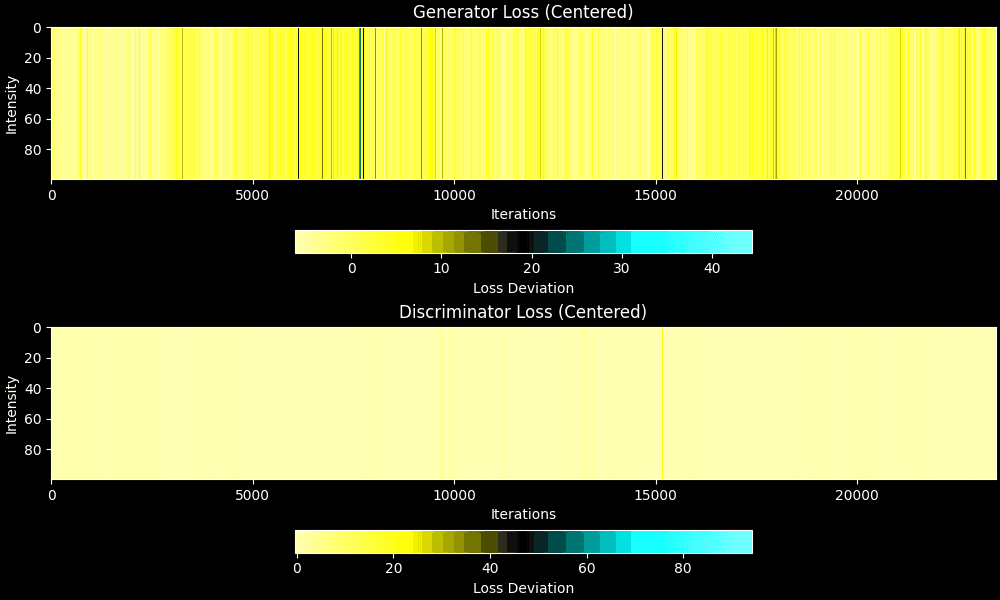

DCGAN Project

This work employs machine learning techniques to perform style transfer between scraped art datasets and private photo albums, generating a series of 64x64 pixel matrices. The computational process simulates physical weathering, diminishing image recognisability while effectively stripping visual metadata. The algorithm obscures semantic clarity, dissolves specific intimacy, and endows the output with the characteristics of an art collection.

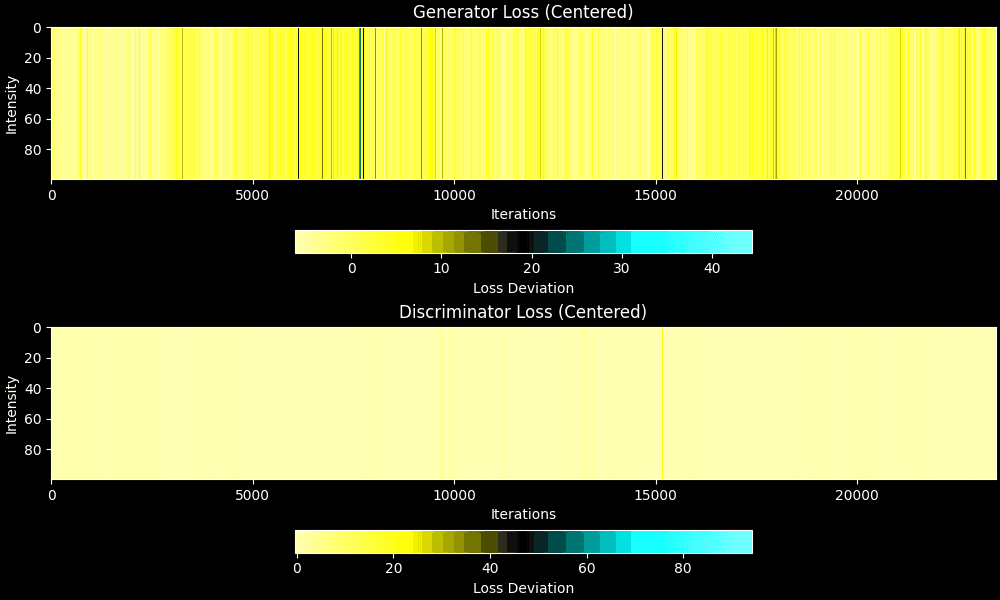

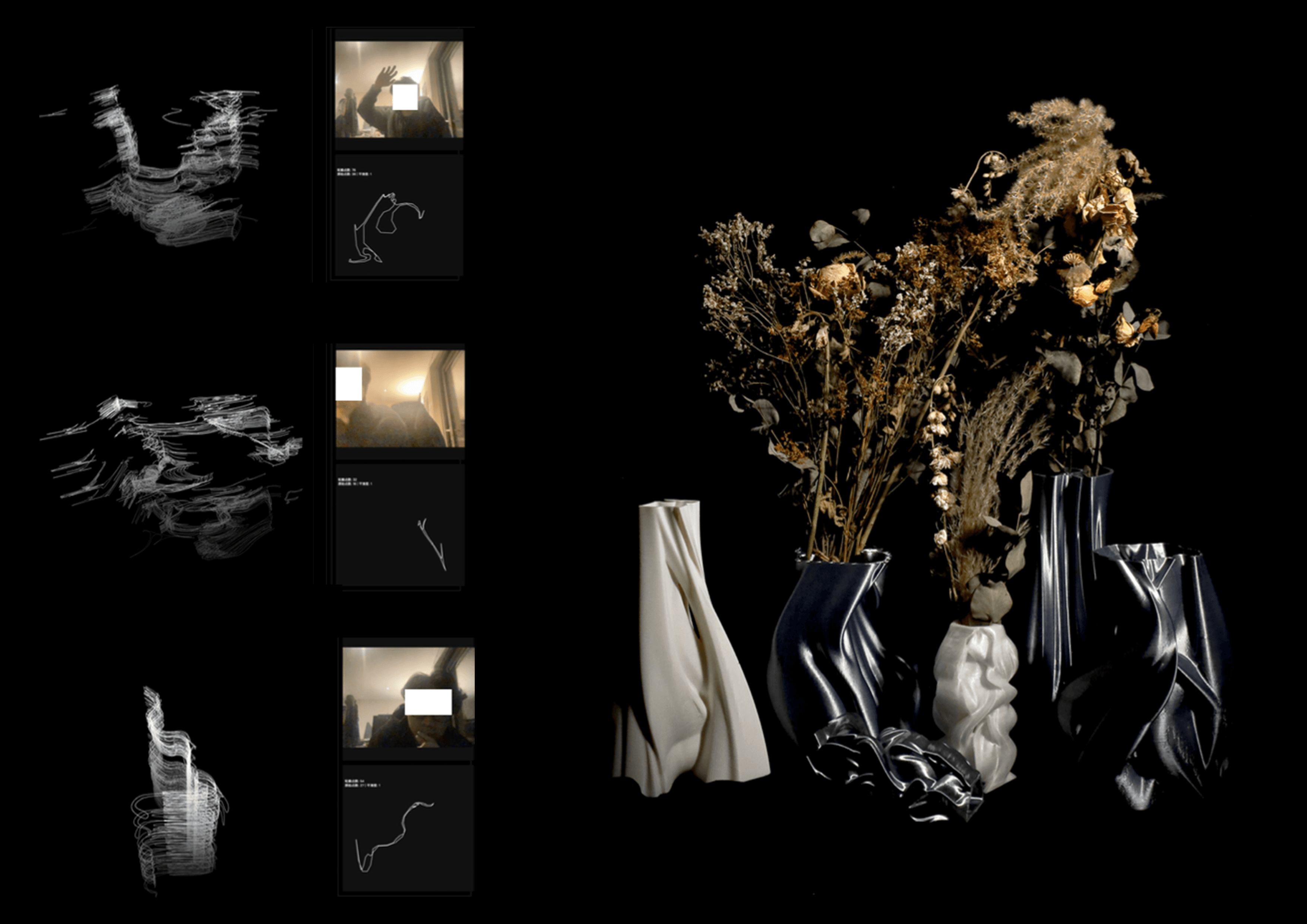

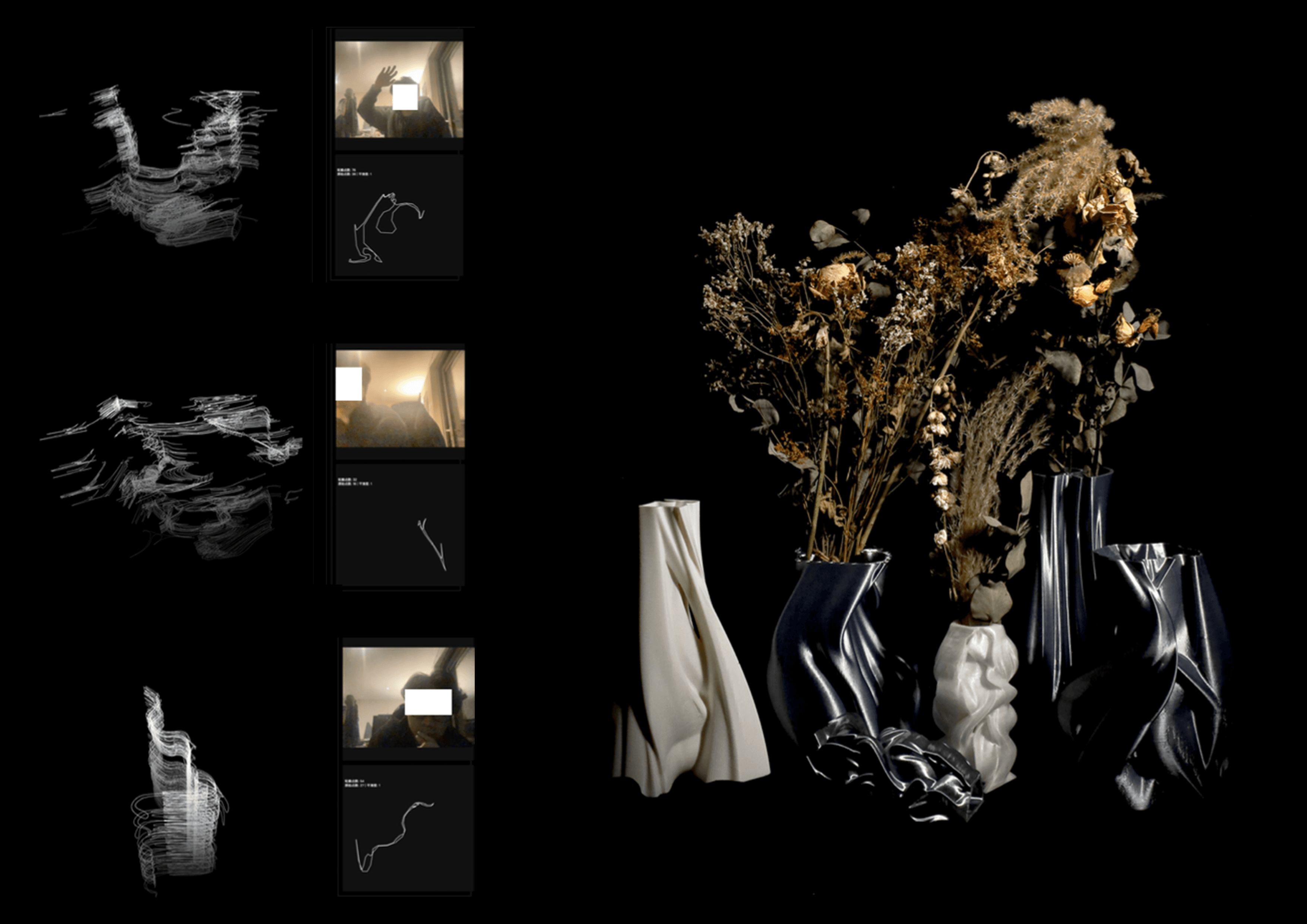

Fleeting scenes from life are translated into tangible vessels.

This project explores the transformative relationship between motion, time and material form. Through custom computer vision algorithms, the programme captures real-time dynamic footage from everyday life and extracts its edge contours. Under the algorithm's gaze, time ceases to flow linearly and is recoded into a vertical spatial dimension. Every fleeting movement before the camera—be it a waving hand or a passing shadow—is sliced and layered sequentially. These successive temporal slices undergo smoothing algorithms, ultimately lofting into organically shaped digital vases.
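The slicing, smoothing and lofting steps described here can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes each frame's edge contour has already been reduced to a list of radii sampled at fixed angles, and the names `smooth_slices`, `loft` and `layer_height` are hypothetical.

```python
import math

def smooth_slices(slices, window=3):
    """Moving average across neighbouring time slices, per angular sample."""
    half = window // 2
    out = []
    for i in range(len(slices)):
        lo, hi = max(0, i - half), min(len(slices), i + half + 1)
        group = slices[lo:hi]
        out.append([sum(col) / len(group) for col in zip(*group)])
    return out

def loft(slices, layer_height=1.0):
    """Stack radial profiles along z (time becomes height); return vertices and quad faces."""
    n = len(slices[0])
    vertices = []
    for k, radii in enumerate(slices):
        for j, r in enumerate(radii):
            angle = 2 * math.pi * j / n
            vertices.append((r * math.cos(angle), r * math.sin(angle), k * layer_height))
    faces = []
    for k in range(len(slices) - 1):
        for j in range(n):
            a = k * n + j
            b = k * n + (j + 1) % n
            faces.append((a, b, b + n, a + n))  # quad joining slice k to slice k + 1
    return vertices, faces

# Assumed input: three frames, each contour sampled at four angles.
frames = [[1.0, 1.0, 1.0, 1.0], [4.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 1.0]]
smoothed = smooth_slices(frames)
vertices, faces = loft(smoothed, layer_height=2.0)
```

The moving average softens the sudden bulge in the middle frame before the slices are joined, which is one simple way to get an organic rather than stepped profile.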

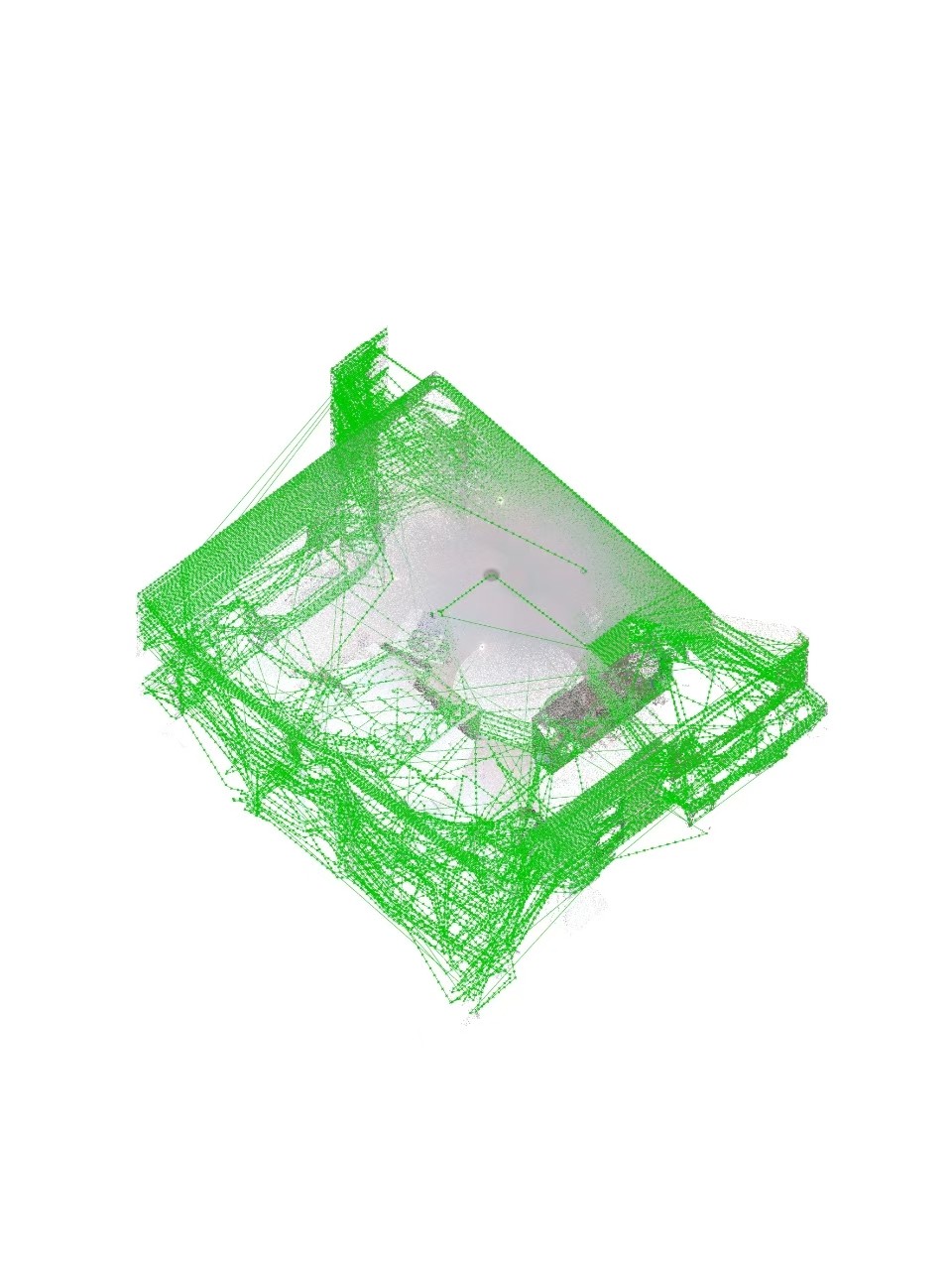

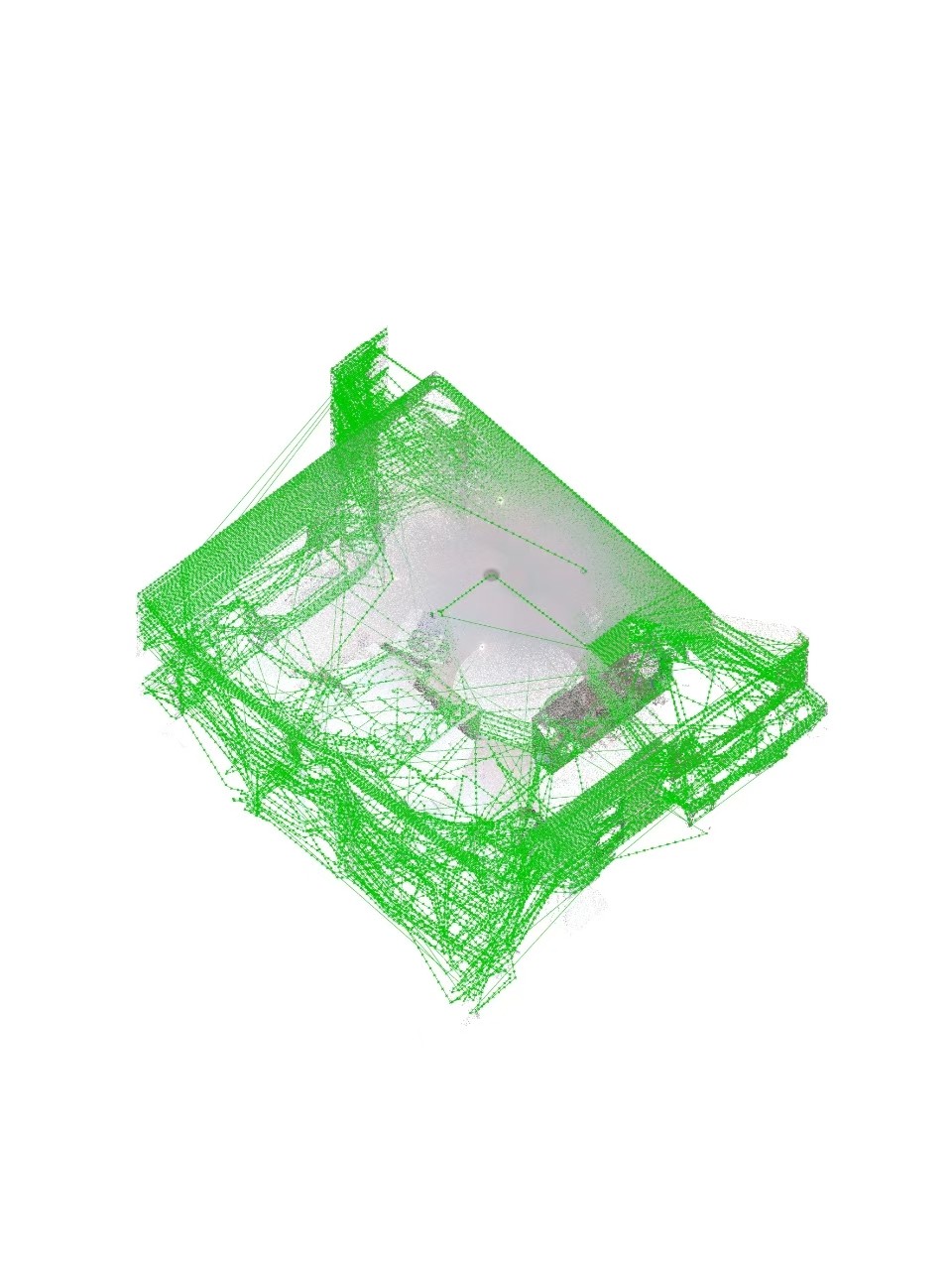

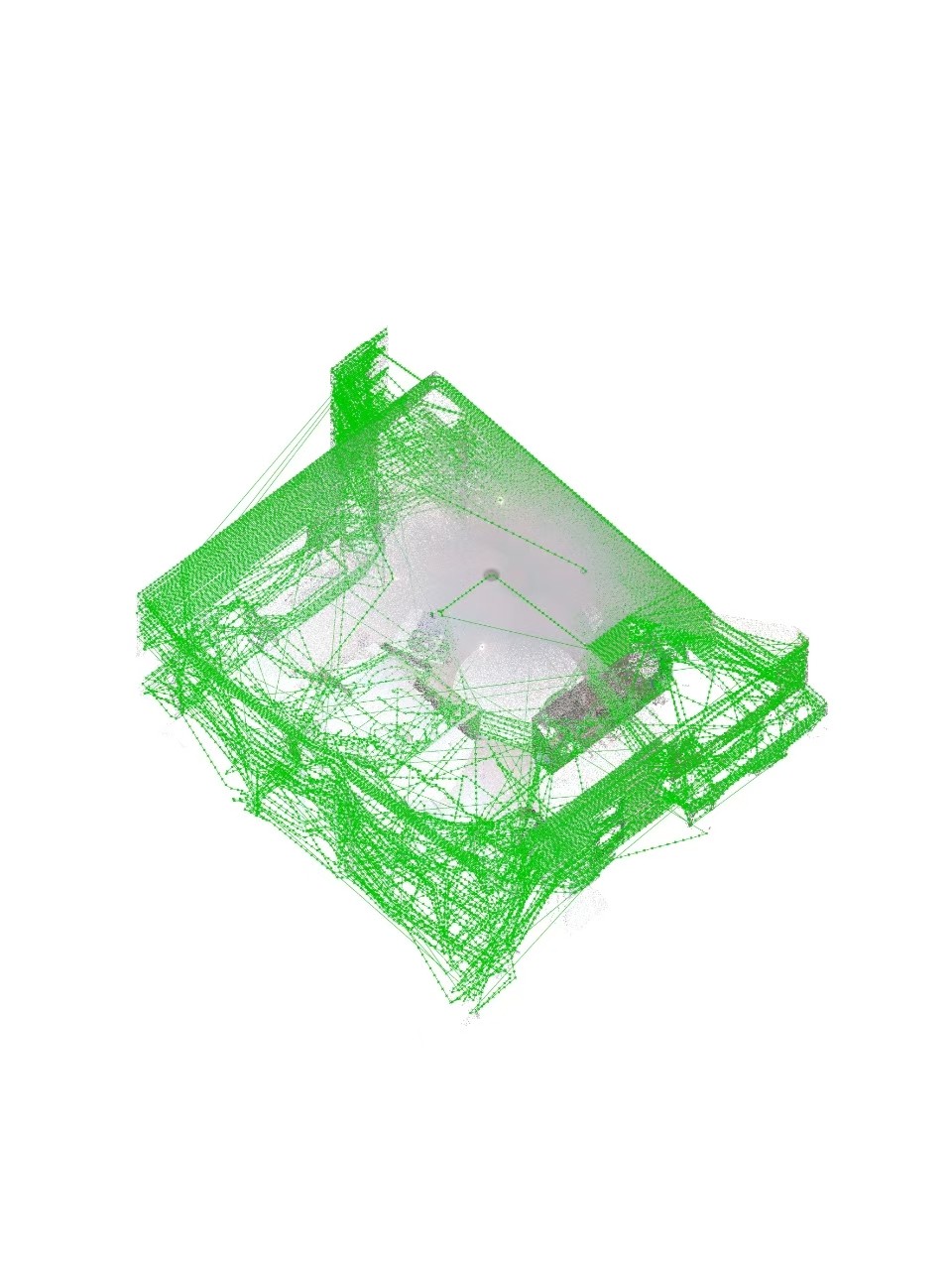

Generating G-code for 3D printing by slicing point clouds from LIDAR data

This project investigates the “conversion error” between digital perception and physical fabrication. By circumventing standard mesh generation processes, I directly slice raw laser-scanned point clouds of rooms into “corrupted” G-code, compelling 3D printers to interpret unstructured data. Deprived of a solid mesh for guidance, the printer attempts to materialise floating coordinates, yielding chaotic, tangled structures. The original spatial scan becomes utterly transformed, ultimately reduced to abstract printed artefacts.
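One way to picture slicing a point cloud without a mesh is the sketch below. It is an assumption-laden illustration rather than the pipeline used here: points are binned into layers by height, ordered arbitrarily within each layer, and joined by `G1` moves. The function name `points_to_gcode`, the lexicographic ordering and the extrusion factor of 0.05 per millimetre are all hypothetical choices.

```python
import math
from collections import defaultdict

def points_to_gcode(points, layer_height=0.2, feed=1200):
    """Bin raw points into z-layers and emit one move per point, with no mesh in between."""
    layers = defaultdict(list)
    for x, y, z in points:
        layers[int(z // layer_height)].append((x, y))

    lines = ["G21 ; millimetres", "G90 ; absolute positioning"]
    extruded = 0.0
    for index in sorted(layers):
        z = (index + 1) * layer_height
        pts = sorted(layers[index])  # arbitrary ordering: the cloud itself has none
        x0, y0 = pts[0]
        lines.append(f"G0 X{x0:.2f} Y{y0:.2f} Z{z:.2f}")
        for (xa, ya), (xb, yb) in zip(pts, pts[1:]):
            extruded += 0.05 * math.hypot(xb - xa, yb - ya)  # assumed extrusion factor
            lines.append(f"G1 X{xb:.2f} Y{yb:.2f} E{extruded:.4f} F{feed}")
    return lines

# Assumed input: three scanned points, two of them in the same layer.
cloud = [(0, 0, 0.5), (3, 4, 0.5), (1, 1, 1.5)]
gcode = points_to_gcode(cloud, layer_height=1.0)
```

Because nothing guarantees that consecutive points are neighbours on a surface, the toolpath jumps across the room scan, which is consistent with the tangled prints described above.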

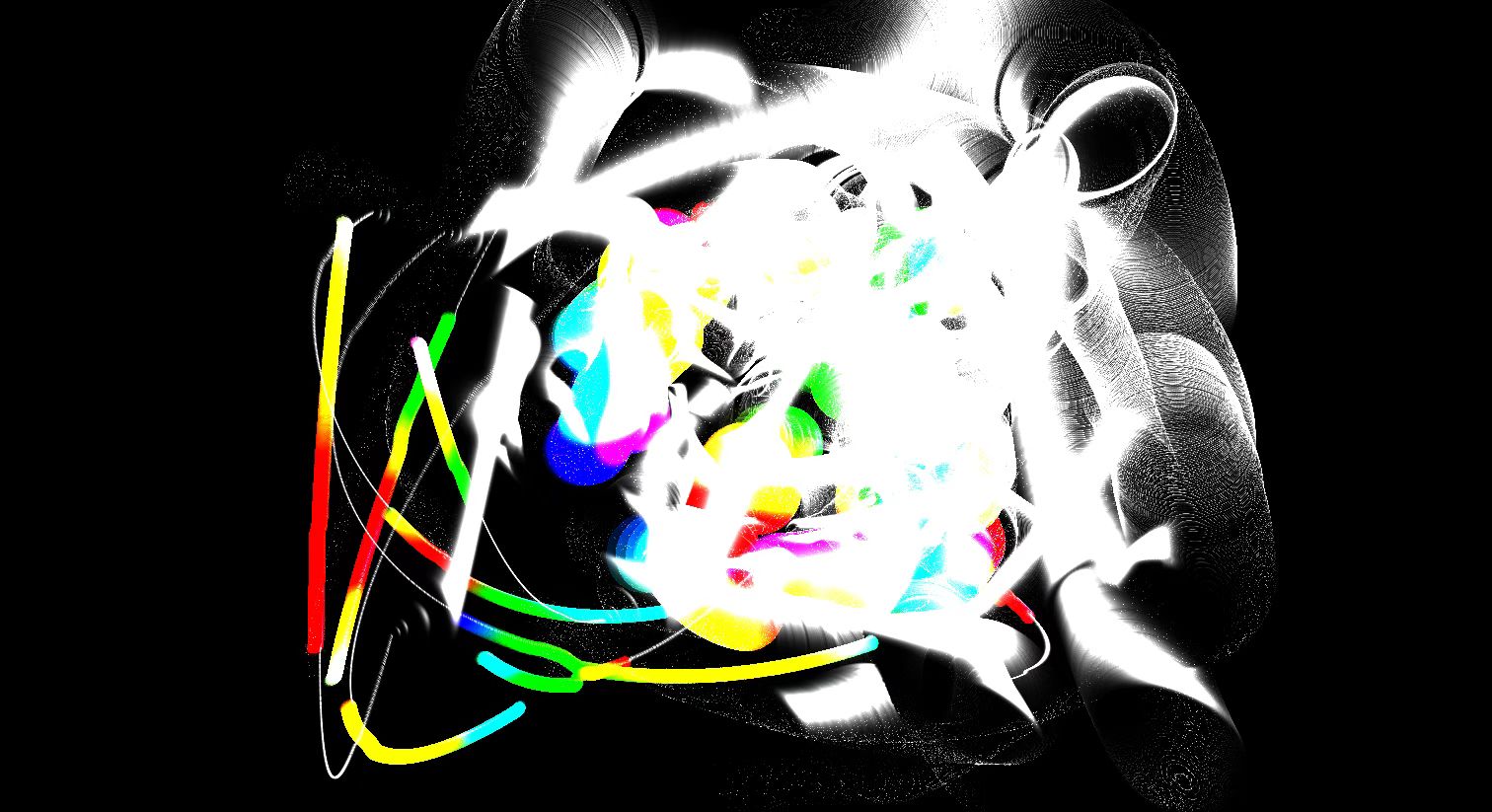

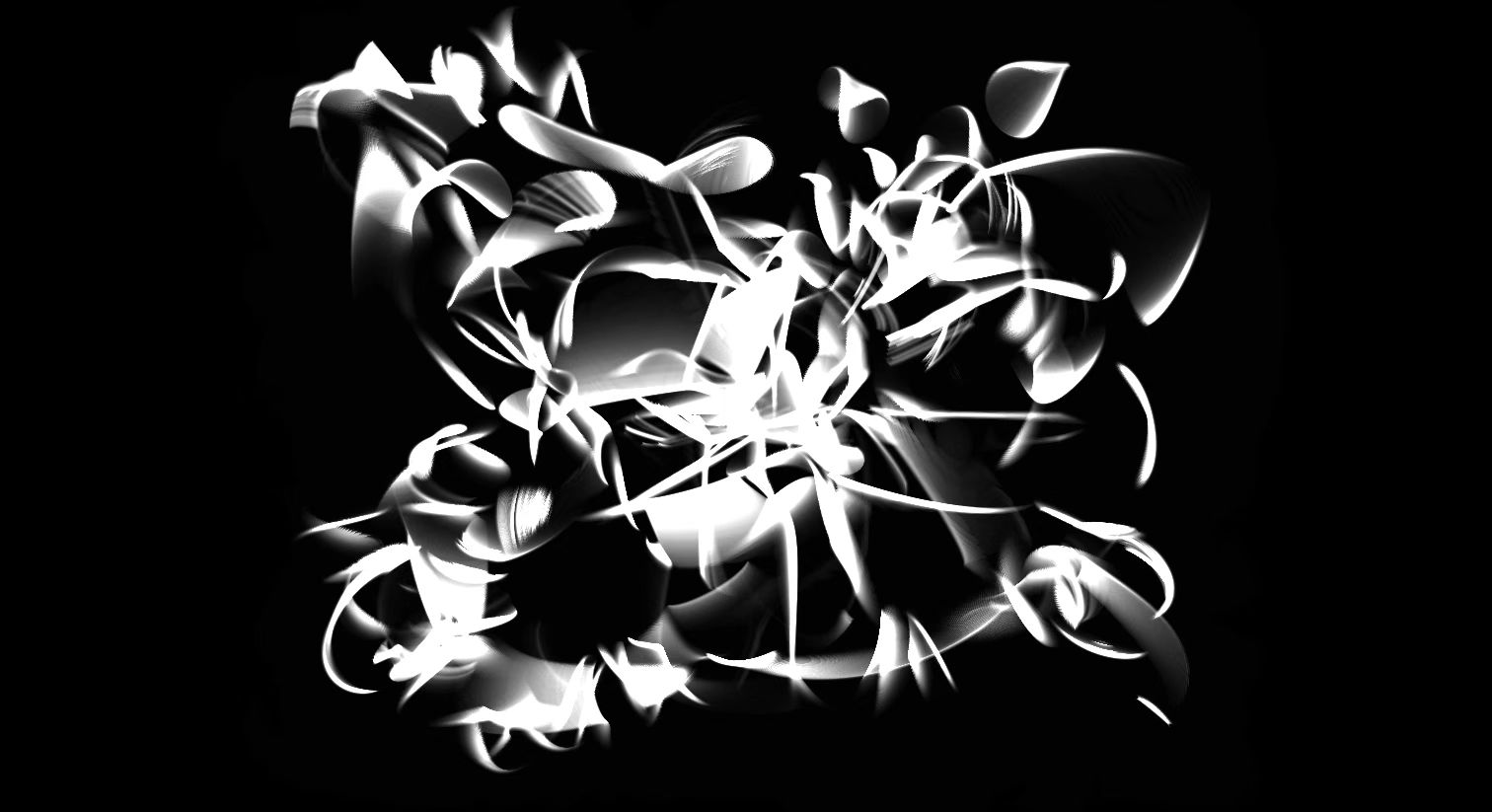

co-drawing machine

Co-drawing Machine is an artistic experiment exploring the concept of ‘relinquishing control’. Within this generative drawing system, the tool ceases to be a passive object in the artist's hands, instead becoming a collaborative partner governed by its own autonomous movement logic.

The programme constructs an organic fluid system based on mathematical chaos (Metaballs), which traverse the canvas or camera feed along trajectories defined by trigonometric functions. The creator cannot precisely dictate the landing point of each stroke, but can only, like a shepherd, interfere with and guide these digital creatures through the repulsive force field generated by the mouse. Through frame buffer feedback technology, the passage of time is recorded as spatial trajectories. The final artwork is an irreproducible trace left by the interplay and collaboration between human intent and machine algorithms within uncertainty.
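The ingredients named above (metaballs, trigonometric trajectories, a repulsive mouse field and frame buffer feedback) can be sketched in a few small functions. This is a hedged illustration with assumed constants and hypothetical names (`ball_position`, `repel`, `field`, `feedback`), not the programme itself.

```python
import math

def ball_position(t, phase, width=640, height=480):
    """Trajectory defined by trigonometric functions (a Lissajous-like path)."""
    x = width / 2 + (width / 3) * math.sin(t + phase)
    y = height / 2 + (height / 3) * math.cos(1.5 * t + phase)
    return x, y

def repel(pos, mouse, strength=2000.0):
    """Push a ball directly away from the mouse; the push shrinks as 1 / distance."""
    dx, dy = pos[0] - mouse[0], pos[1] - mouse[1]
    d2 = dx * dx + dy * dy + 1e-6  # small epsilon avoids division by zero
    return pos[0] + strength * dx / d2, pos[1] + strength * dy / d2

def field(px, py, balls, radius=40.0):
    """Metaball field: sum of r^2 / d^2 over all balls; values of 1 or more are 'inside'."""
    return sum(radius ** 2 / ((px - bx) ** 2 + (py - by) ** 2 + 1e-6) for bx, by in balls)

def feedback(previous, current, decay=0.95):
    """Frame buffer feedback: fade the old frame, then keep the brighter value per pixel."""
    return [max(p * decay, c) for p, c in zip(previous, current)]

# Assumed usage: one ball at t = 0, nudged by a mouse 10 pixels to its left.
start = ball_position(0.0, 0.0)
nudged = repel((110.0, 100.0), (100.0, 100.0))
trail = feedback([1.0, 0.0], [0.0, 0.5], decay=0.5)
```

The author steers only through `repel`, never by setting positions directly, which matches the shepherd analogy: the underlying path stays with the machine.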

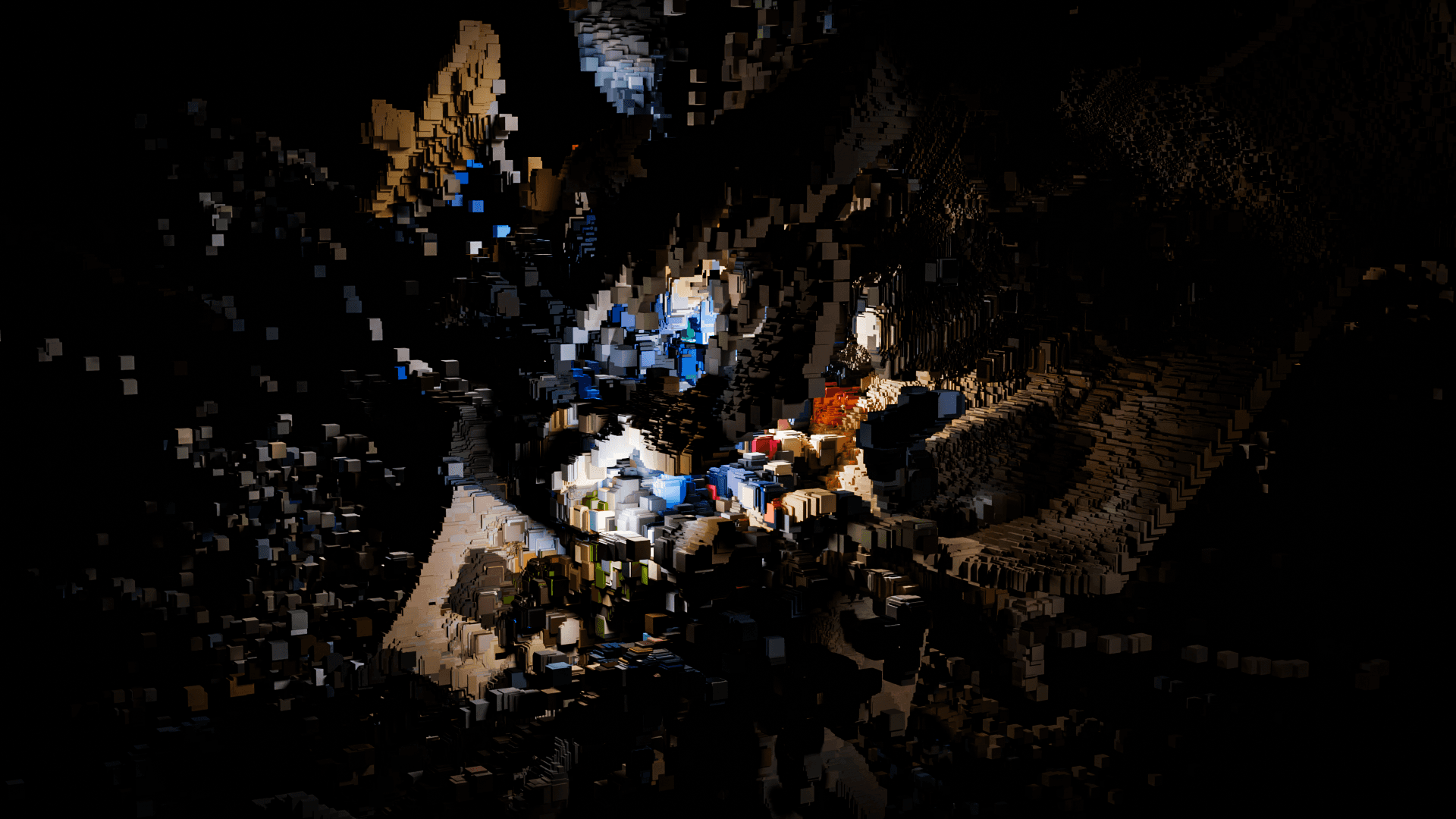

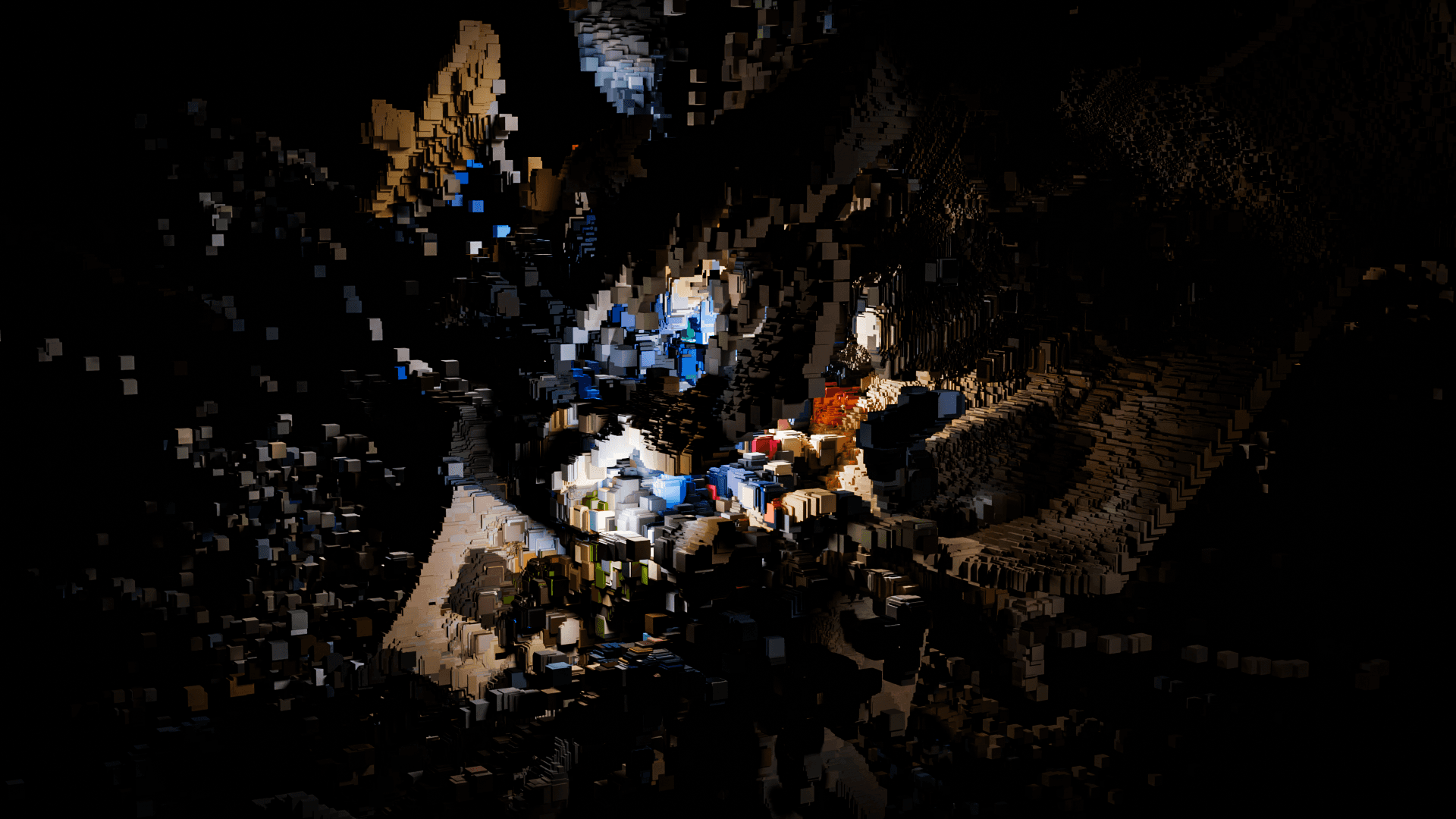

gaussian splatting test 01

This side project explores how scanned geometry can be reinterpreted through voxelisation and Gaussian splatting as an alternative rendering strategy. Starting from a 3D scan of a simple ellipsoid form, I wrote a small custom program to convert the raw point data into a voxel grid, treating each voxel as a discrete density field rather than a conventional mesh surface.
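A rough sketch of the voxelisation step, under stated assumptions: point counts per voxel stand in for density, and each occupied voxel becomes one isotropic Gaussian. Full Gaussian splatting uses optimised anisotropic Gaussians, so this is a simplified stand-in, and `voxelise`, `to_gaussians` and the dictionary keys are hypothetical names.

```python
import math
from collections import Counter

def voxelise(points, voxel_size=1.0):
    """Count points per voxel; the count acts as a discrete density value."""
    grid = Counter()
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        grid[key] += 1
    return grid

def to_gaussians(grid, voxel_size=1.0):
    """One isotropic Gaussian per occupied voxel, with opacity scaled by density."""
    peak = max(grid.values())
    return [
        {
            "centre": tuple((i + 0.5) * voxel_size for i in key),
            "sigma": voxel_size / 2,
            "opacity": count / peak,
        }
        for key, count in sorted(grid.items())
    ]

# Assumed input: three scan points, two of which share a voxel.
scan = [(0.2, 0.2, 0.2), (0.8, 0.1, 0.4), (1.5, 0.5, 0.5)]
grid = voxelise(scan)
splats = to_gaussians(grid)
```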

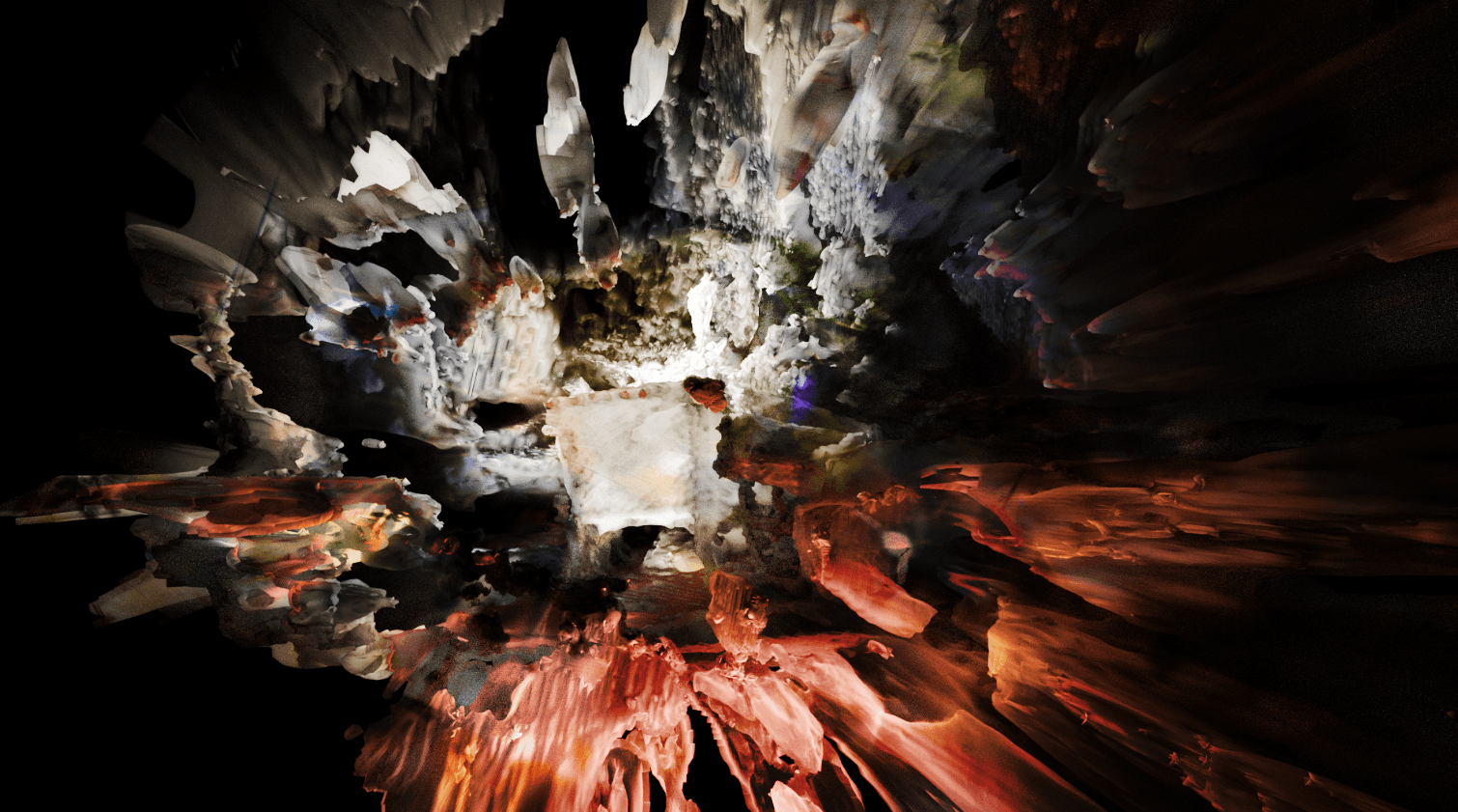

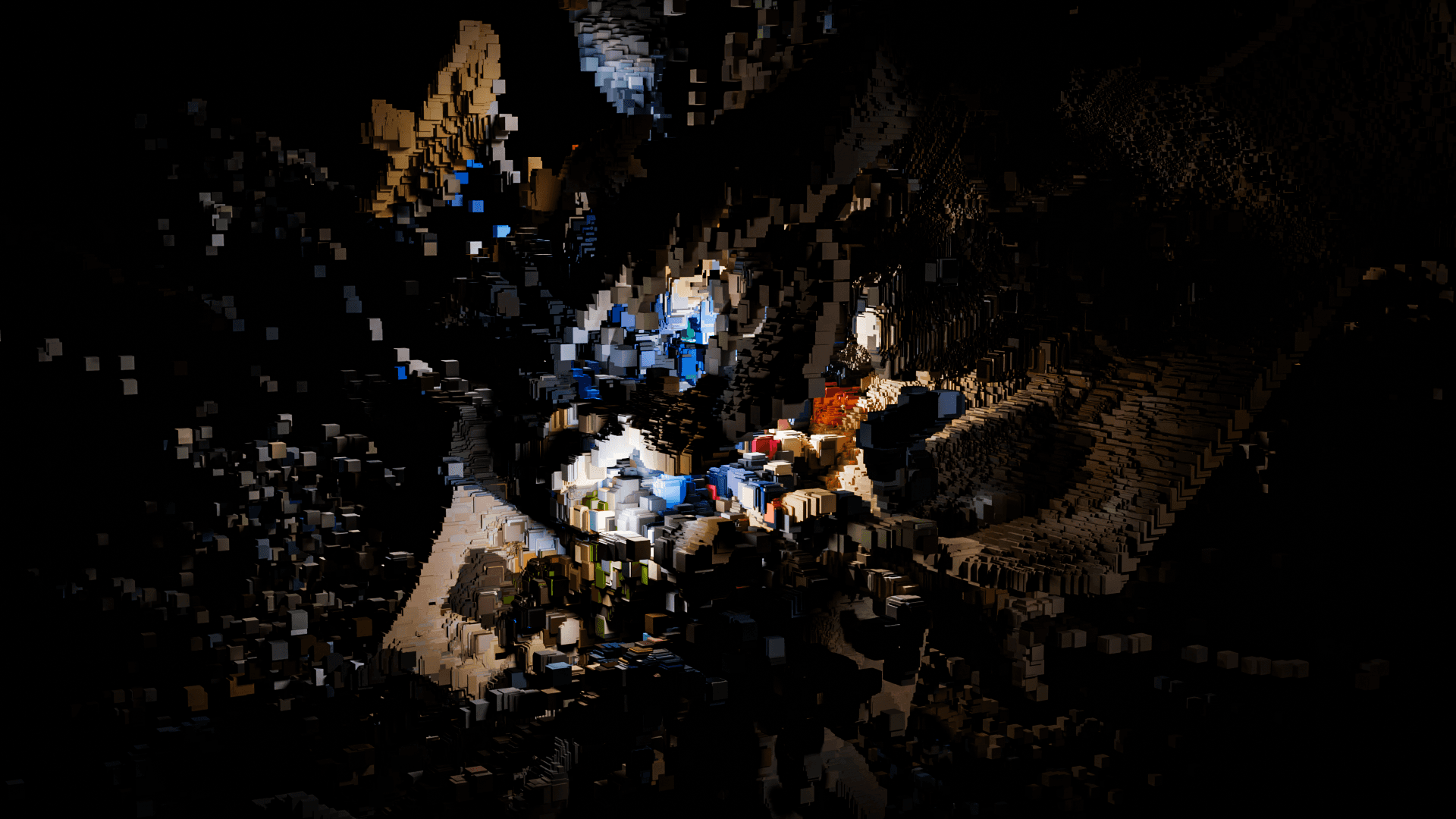

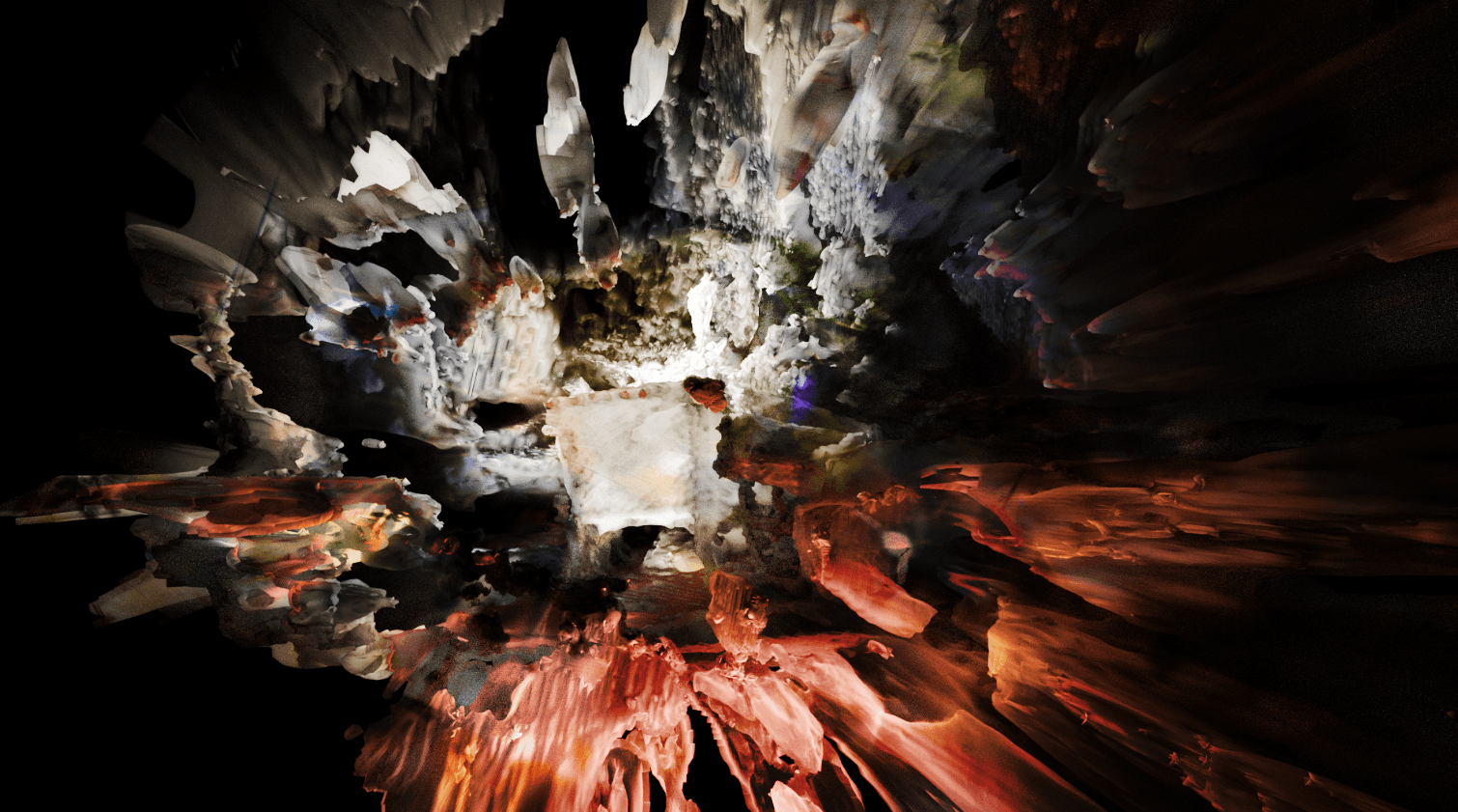

gaussian splatting test 02

This experiment explores how to endow Gaussian splatting techniques with materiality through Poisson surface reconstruction.

wearable point cloud

Using a parametric algorithm, this project generates a transparent facial mask from point-cloud data. The script samples the facial point cloud and traces flows across key features such as the eyes, nose, cheekbones and jawline, producing a mask defined by two layers of information: the original facial geometry and the algorithmic flow pattern. The resulting form is a thin, translucent shell that can be realised through resin or PolyJet 3D printing, functioning as a data-derived “second skin” rather than a direct reconstruction of the face.

Lumetric Viewer V1

This project is a phone-based visualisation tool that applies real-time distortions to captured scenes using a Lumentric RGBI (RGB + intensity) pipeline. The camera feed is processed on-device and converted into alternative spatial representations rather than conventional images. The tool operates in two primary modes: Point Cloud Mode, which streams the scene as a live point cloud with per-point RGBI data, and Mesh Mode, which reconstructs a triangulated surface from the same input. In both cases, the output can be exported as PLY or PTS files, allowing the recorded data to be integrated into downstream workflows such as 3D modelling, simulation or computational design.
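As an illustration of the export step, the sketch below writes per-point RGBI data as ASCII PLY and as PTS. It is not the viewer's exporter: the property name `intensity` in the PLY header is an assumed custom field, and the PTS column order (x, y, z, intensity, r, g, b after a leading point count) follows a commonly used layout rather than anything confirmed by the project.

```python
def to_ply(points):
    """Serialise (x, y, z, r, g, b, i) tuples as ASCII PLY with a custom intensity property."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "property float intensity",  # assumed property name
        "end_header",
    ]
    body = [f"{x} {y} {z} {r} {g} {b} {i}" for x, y, z, r, g, b, i in points]
    return "\n".join(header + body) + "\n"

def to_pts(points):
    """Serialise the same tuples as PTS: a point count, then x y z intensity r g b."""
    lines = [str(len(points))]
    lines += [f"{x} {y} {z} {i} {r} {g} {b}" for x, y, z, r, g, b, i in points]
    return "\n".join(lines) + "\n"

# Assumed input: a single orange point with intensity 0.5.
sample = [(0.0, 1.0, 2.0, 255, 128, 0, 0.5)]
ply_text = to_ply(sample)
pts_text = to_pts(sample)
```

Downstream tools differ in how they read extra PLY properties and PTS intensity ranges, so the field names and scaling would need checking against the target application.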

Lumetric Viewer V2

Based on the Lumentric Viewer V1 framework, this version adds an image-to-3D conversion pipeline that generates abstract Lumentric spaces from static inputs. Users can upload 2D images, which are then processed into a 3D field using a parameterised mapping between pixel position, colour values and intensity. The pipeline interprets the image in Lumentric space (RGBI) and reconstructs it either as a dispersed point distribution or a continuous volume, depending on the chosen settings. Key parameters such as sampling density, depth mapping function and intensity falloff can be adjusted to control the degree of abstraction and spatial deformation. The resulting 3D output can be inspected in real time within the viewer and exported for downstream use in modelling or rendering workflows, forming the basis for developing and testing visualisations for my design brand, CHOGA.
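A parameterised pixel-to-point mapping of this kind might look like the sketch below. Everything specific in it is an assumption made for illustration: intensity is taken as Rec. 601 luma, sampling density is a pixel `step`, the depth mapping is a caller-supplied `depth_fn`, and intensity falloff is modelled as an exponent. The viewer's real mapping may differ on every point.

```python
def intensity(r, g, b):
    """Rec. 601 luma in the range 0..1, used here as a stand-in for the I channel."""
    return (0.299 * r + 0.587 * g + 0.114 * b) / 255.0

def image_to_points(pixels, step=1, depth_fn=lambda i: 10.0 * i, falloff=1.0):
    """Map each sampled pixel to a point (x, y, z, r, g, b, i).

    step     -- sampling density: take every step-th pixel in x and y
    depth_fn -- depth mapping function from intensity to z
    falloff  -- exponent applied to intensity before the depth mapping
    """
    points = []
    for y in range(0, len(pixels), step):
        for x in range(0, len(pixels[y]), step):
            r, g, b = pixels[y][x]
            i = intensity(r, g, b)
            z = depth_fn(i ** falloff)
            points.append((x, y, z, r, g, b, i))
    return points

# Assumed input: a 2 x 2 checkerboard given as rows of (r, g, b) tuples.
image = [
    [(255, 255, 255), (0, 0, 0)],
    [(0, 0, 0), (255, 255, 255)],
]
dense = image_to_points(image)
sparse = image_to_points(image, step=2)
```

Raising `step` thins the field into a dispersed point distribution, while a steeper `depth_fn` or higher `falloff` exaggerates the spatial deformation of bright regions.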

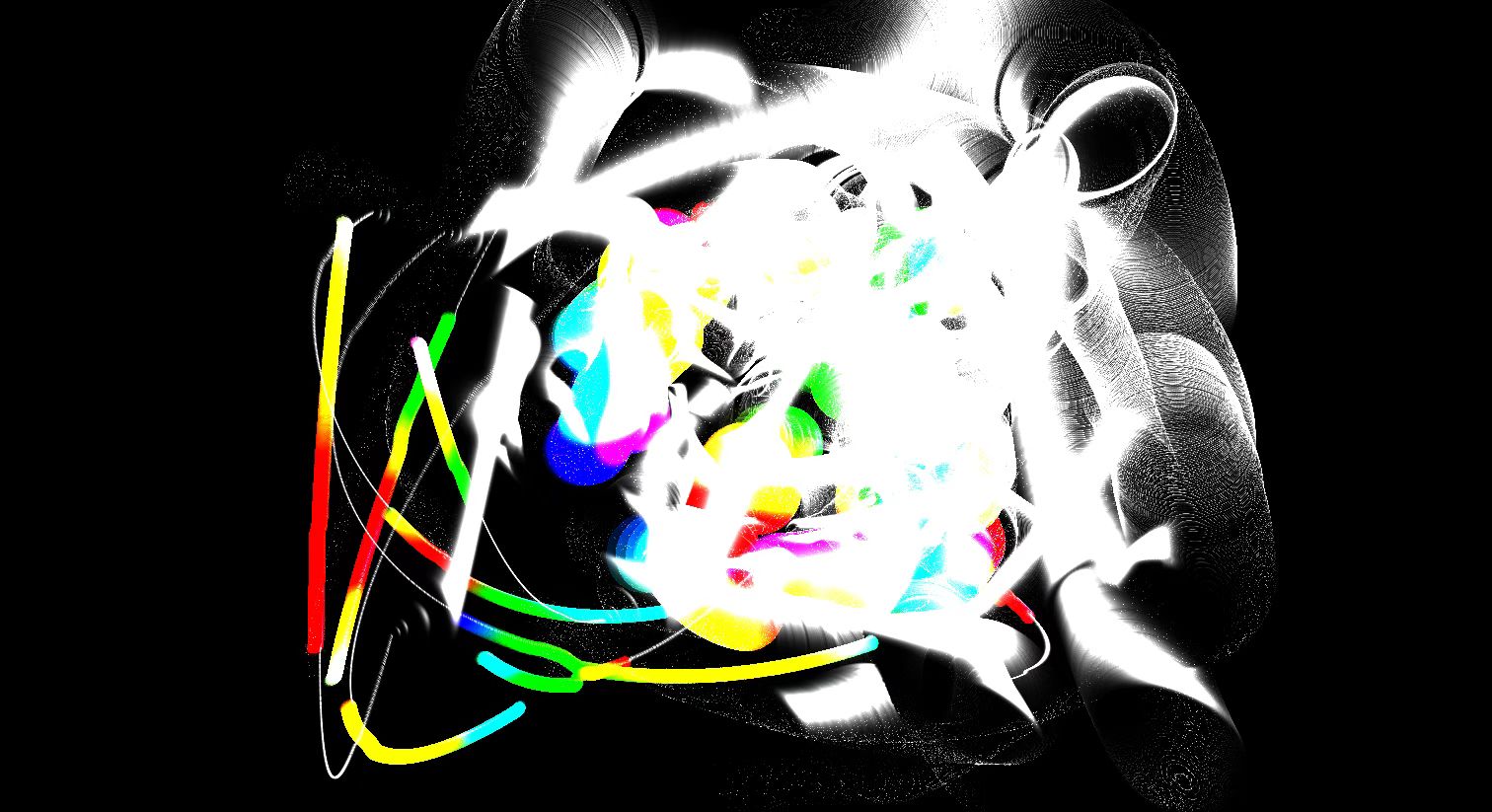

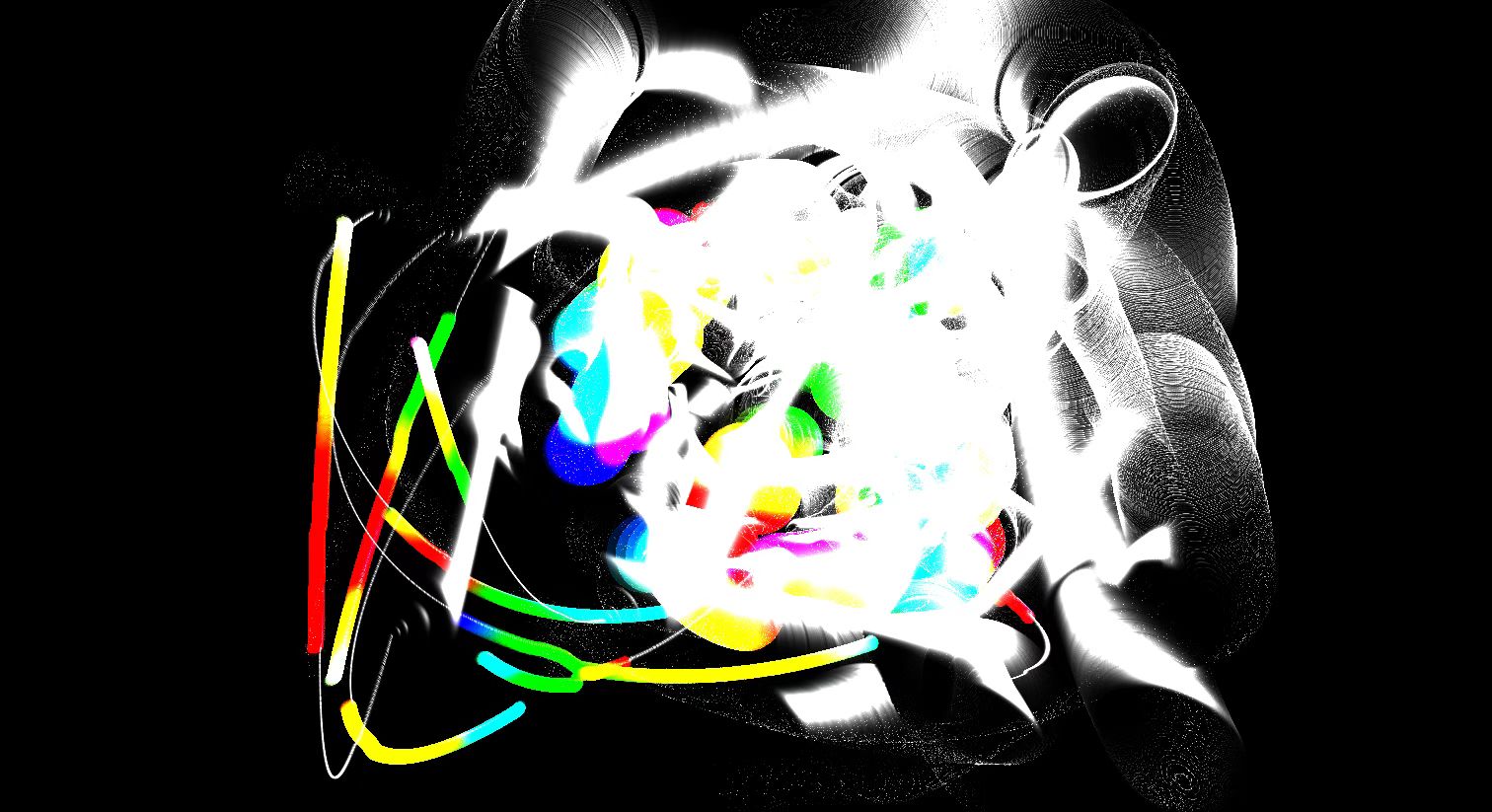

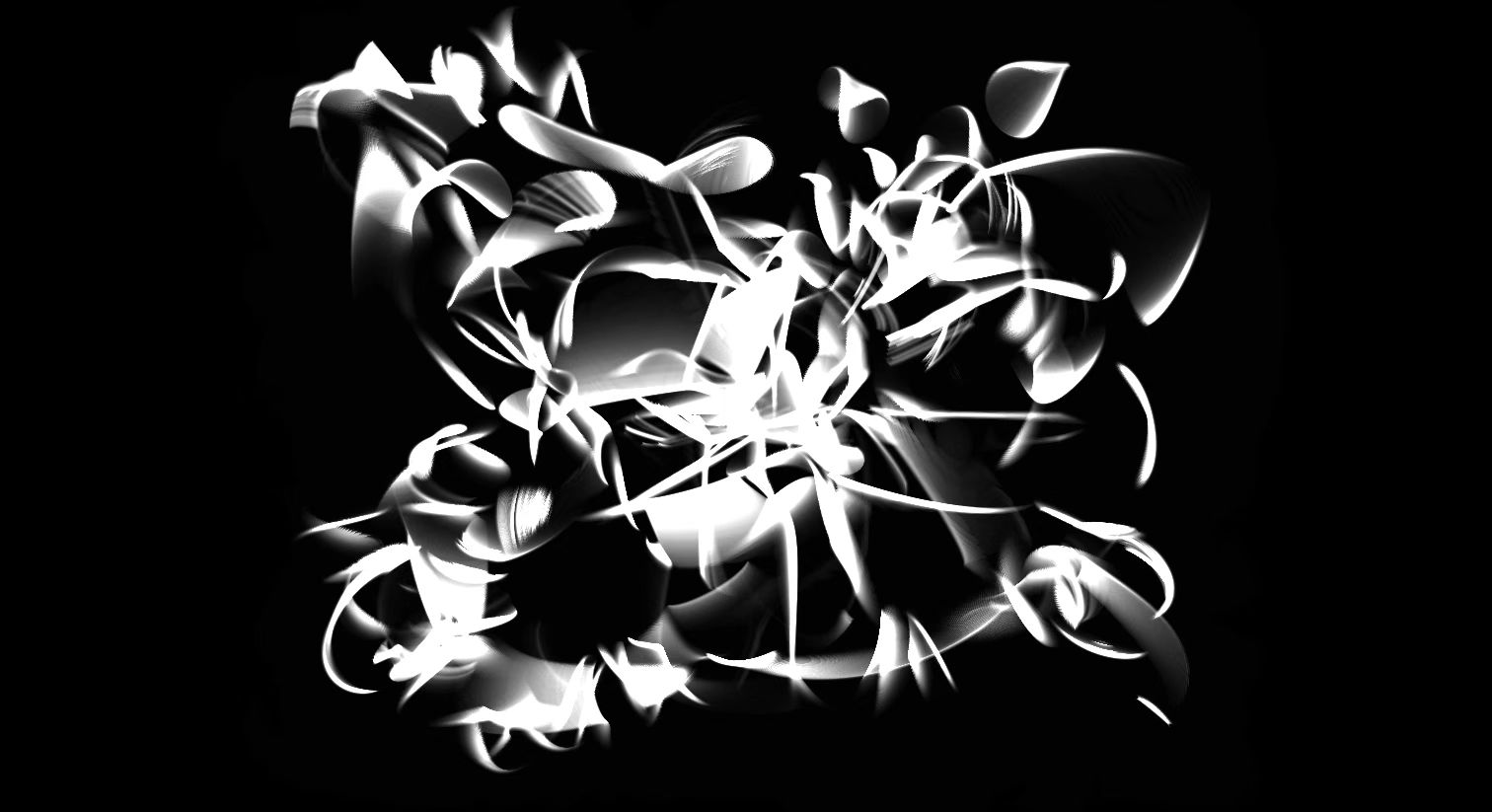
